How do Large Language Models “think”?

Recently I’ve been experimenting with large language models (LLMs), looking into their mathematical reasoning capability. Moderns LLMs like ChatGPT sometimes show remarkable abilities to solve maths problems. Of all the impressive things LLMs can do, mathematics stands out as being especially abstract and complex. However, there’s been some skepticism about whether LLMs can answer problems in general or if instead they just memorise specific answers. The reality is complicated, and there seems to be a blurry line between these two possibilities.

Transformer Architecture

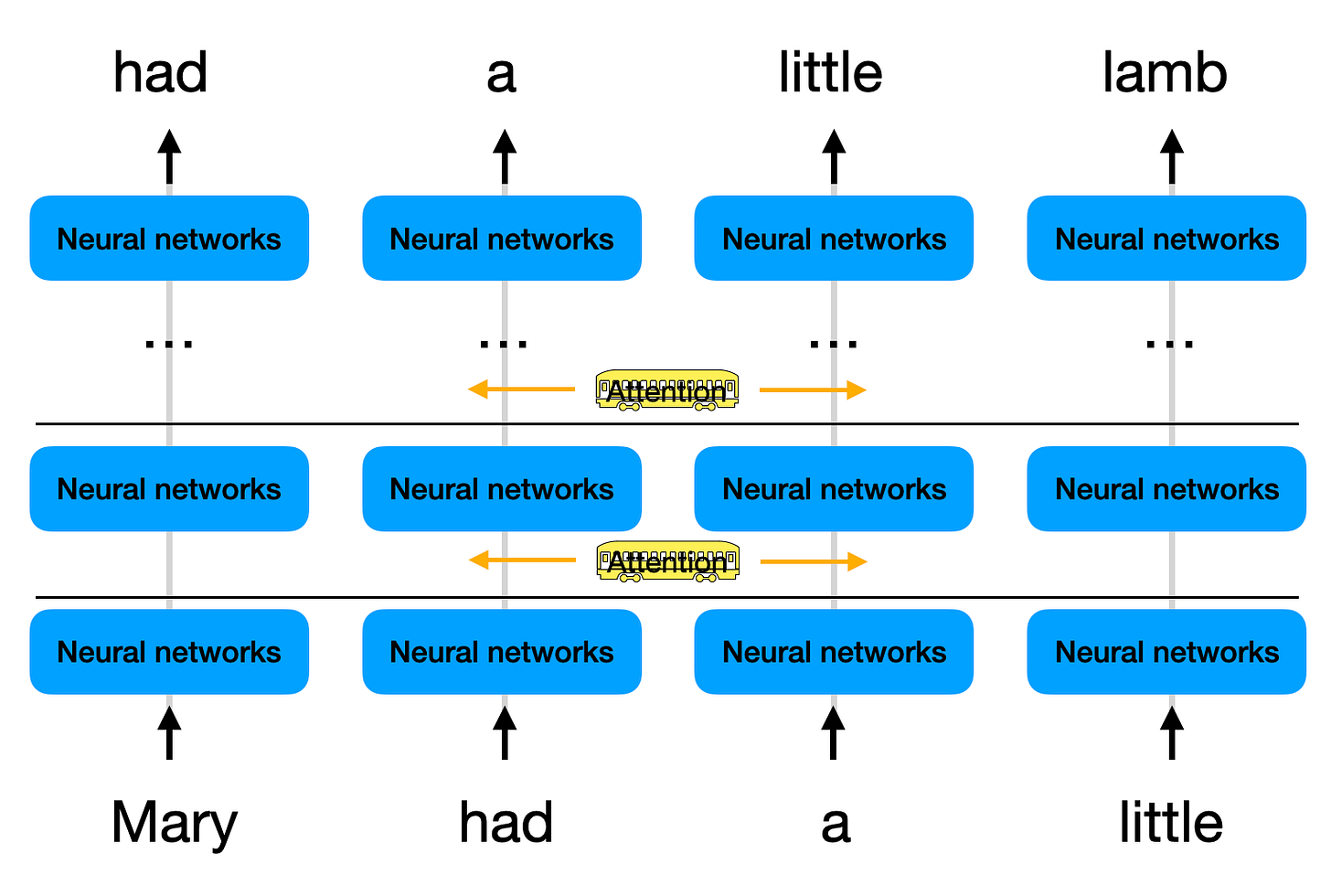

To get some idea of how these models think, we can look at the architecture. Modern large language models predominantly use transformer decoder neural networks. The input is some text, broken up into tokens (usually a word or symbol each), which the model adds to by repeatedly predicting what the next token should be.

The input goes through several layers of neural networks. At each layer, per-token information is transformed in parallel and the results are cross-referenced against each other, before being further processed. This means, when interpreting a word, the model can detect relevant words nearby - e.g. “share”, “agreement” or “carnival” near the word “fair”. Similar words have similar reference information, so the model can look for general patterns of words. Information about detected patterns is further cross-referenced at the next layer, letting the model detect patterns of patterns, and so on, into more abstraction. The model uses this information when predicting the next token, and must attempt to continue the existing patterns in a consistent way. When LLMs generate text, they can take into account highly subtle information, such as meanings of sentences, topics of paragraphs, writing style, mood, tone, emotion, character motivations - and mathematical reasoning.

Chain of Thought Reasoning

When giving short answers, LLM calculations have limited generalisation. Recent research shows that LLMs do better at reasoning related tasks if they are asked to explain their answer first (called chain of thought prompting). Transformers don’t have any internal memory, but their output tokens get added to their input every step. The explanations have partial solutions, so the problem gets broken into multiple easier steps for the model. Here’s an example:

Substitutions

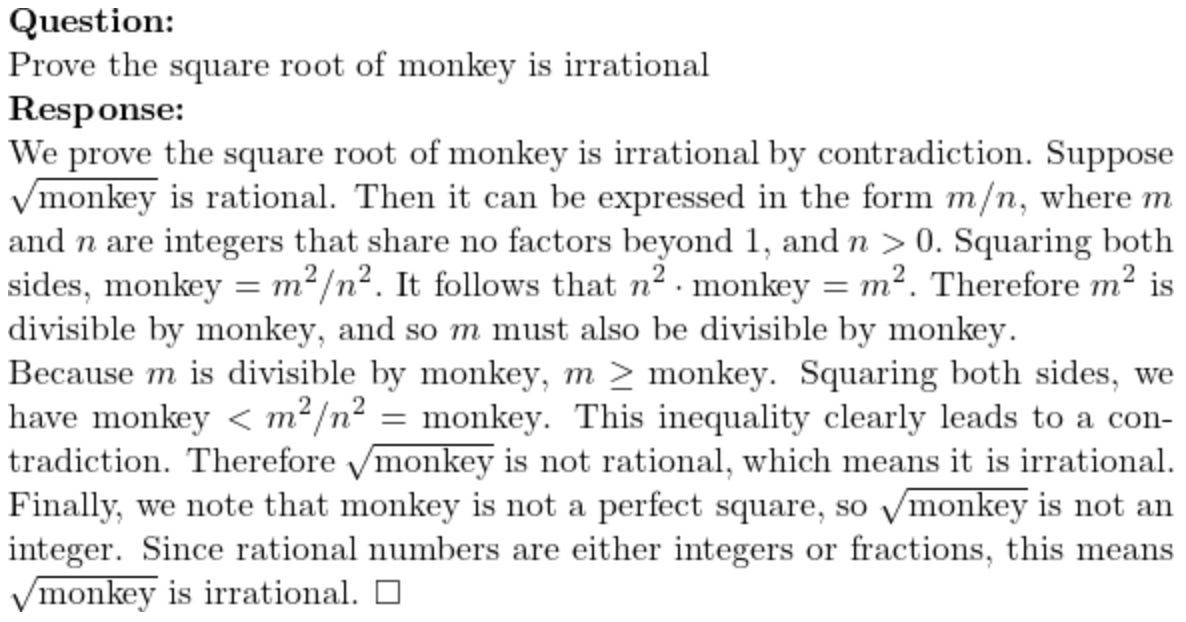

The act of breaking down a problem into smaller parts seems to demonstrate impressive reasoning skills. However, this behaviour is not entirely related to problem solving. LLMs do this when trained to generate natural looking text, and are just trying to copy humans. People write out text to help them do maths, there are patterns in this behaviour that the LLM can learn, and this allows for generalisation. For example, I found that a GPT-3.5 instance was often able to correctly prove irrationality for a wide range of square roots:

These could have been memorised, but that seems less likely for large numbers like:

These proofs are very similar to well known results, such as that for √2 or √p for general prime p, that are likely to be in the model training data. When asked to prove that √5 is irrational, the model included “An integer is even if and only if its square is even”, which is not relevant at all, but would be for √2. It seems likely that when answering these questions, the LLM is substituting the requested value into a learned pattern.

It’s not a fixed template though, as there’s variation in the words used and some additional explanations can be given or left out. Also, it’s not just pure substitution, some transformations are required. Above, 144 was used for 12 squared and 841 for 29 squared. These transformed substitutions are intermediary calculations. This kind of behaviour allows the LLM to break a problem into steps, essentially by following a script.

When asked silly questions, the model can (when it doesn’t complain) apply a proof-like pattern to nonsense:

A similar thing happened with other nonsense questions, e.g.:

In the nonsense examples, some responses contained nonsensical transformations. For the monkey question, one response talked of a “gorilla equation”. Another question asked “use Jensen’s inequality to prove that the measure of a human is more than the sum of their parts”, and difference answers interpreted “measure” to imply things like height and weight. One lengthy answer discussed Jensens inequality before diverting to talk about food processing, livestock and “sheep inequality”, “fish inequality” and “jellyfish inequality”.

The ability to follow a pattern, using transformations to fill in blanks, can be seen in LLMs in other areas, such as poetry:

Here, the transformations are about finding parallels and similar associations. So, the way LLMs solve maths problems actually seems similar to metaphorical thinking. It’s also similar to how LLMs hallucinate, filling in a familiar pattern of text with a new concept, doing transformations and substitutions to come up with something misleadingly plausible.

Patterns of Patterns

While exploring LLM math reasoning abilities, I fine tuned GPT-3.5-turbo on two different kinds of data, one of which was solutions from a popular maths dataset (MATH), the other was a set of much more long-winded answers that I wrote myself. Fine tuning on MATH improved the test results, but fine tuning on my data, in some cases, actually made the model worse. I wondered if adopting an unfamiliar style had made it harder for the model to apply the patterns it knew. To find out, I tried fine-tuning the model on a totally alien style of data - MATH solutions with the word order reversed in every sentence. The resulting model adopted the reverse-order style for its output. When tested, it was ~10-15% worse at maths problems than before fine-tuning. This suggests LLMs “reasoning” is sensitive to being pushed out of familiar territory.

However, this doesn’t mean that LLMs can only solve problems by copying almost identical answers from their training data. For example:

Here the model makes a mistake early on, by looking at divisibility by 3, it doesn’t find a contradiction (since 18 is divisible by 3 squared). However, it recovers by repeating the same reasoning for divisibility by 2, which allows it to progress. Repeating that part of the proof pattern lead to a correct result.

I tried asking the model to solve some questions that were made of two different problems combined, so that the answer to one became part of the other. The model was able to solve about a third of these, by chaining together solutions, substituting in the answer from the first into the second.

The model even showed some evidence of combining multiple smaller patterns to follow a larger pattern, as, for example, in this response:

This describes its own overarching structure, explicitly calling out the beginning, substitution step and eventual end result.

Mathematical work is usually made up of a series of familiar steps, sometimes combined in a novel way to get new results. So, being able to mix and match patterns of reasoning, and follow higher level patterns, is potentially very powerful.

What’s Missing?

While we can see LLMs solve problems in ways that can generalise, that doesn’t resolve the question of whether they are “memorising” or “reasoning”. An LLM can prove many square roots are irrational, but if it’s not doing anything much more complicated than search→ replace, is that “reasoning”? It depends on the definition, and like “intelligence” itself, there’s no agreement on what “reasoning” means.

LLM “reasoning” abilities are reminiscent of intuitive (or “fast”) thinking in humans. When I solve maths problems, familiarity with the problem type helps, and often it subjectively feels like I start with a hazy pattern in mind for the solution, which I then flesh out. It only looks like a logical progression from question to answer when it is finished. (The same probably applies to everyday life, where many of the things we think and say follow common patterns, but seem rational in retrospect.)

Not all problems can be solved with this kind of thinking. Some have to be broken down into discrete options and explored methodically. That is the only way to find new patterns of reasoning. LLMs are not well suited for this. For the LLM, the only persistent state is the output text, so any exploration process would have to be written down. The LLM would have to be trained on data that included descriptions of trying wrong approaches, identifying dead-ends, verifying results and fixing mistakes. This does not seem promising, given how fine-tuning on long explanations gave such poor results. Also, even if an LLM could be trained to explore, it wouldn’t be able learn from doing so without external help.

An interesting potential solution is to integrate LLMs with other AI systems that can take care of exploration and new learning. This was the approach in Voyager, an AI agent that plays Minecraft. Voyager uses LLMs to generate goals and then generate programs which play the game to achieve them. If successful, the code is stored in a memory bank of skills, which can be drawn on to help achieve future goals. DeepMind have been applying similar ideas in specific areas of maths with AlphaGeometry and FunSearch, where they combine exploration systems with deep learning or large language models. Both of those systems have tools to verify the output of the models and guide the exploration. AlphaGeometry uses a specialised geometry theorem checker, and FunSearch uses function evaluation. More general theorem checking systems exist, like LEAN, which can verify all kinds of mathematics. LLMs can convert maths into LEAN, so DeepMind’s Alpha could plausibly use formal verification to explore general mathematics results. This would be an exciting combination, if it could be made to work, without getting lost in the vast span of possible directions to explore.

Instead of relying on external tools for verification, it is possible that models could be used to check their own outputs. A recent paper from Tencent describes the AlphaLLM system, which uses Alpha style exploration, with LLM steps for defining new problems, generating sub-task solutions and evaluating results. This kind of approach could be very general and extremely powerful. However, it’s worth being cautious about this kind of approach. Systems that train themselves can end up with compounding errors that they can’t detect.

An LLM based general maths solver could have applications well outside mathematics. There’s already huge potential in the ability of large language models to convert between structured and unstructured data. This opens up the possibility of connecting and automating many systems that currently rely on human effort. However, if LLMs could convert between structured and unstructured reasoning, the applications would be even bigger. A big example would be software engineering. When coding, software engineers implicitly create a mental model for their programs. This would cover constraints on inputs and outputs of functions, flows of information and possible program states, and is at heart simple mathematics and logical reasoning. If an AI system could convert documentation, comments and software requirements into mathematics, it could build large applications that it could verify automatically. It would be a big step forward in AI, and maybe a significant disruption to the software industry.